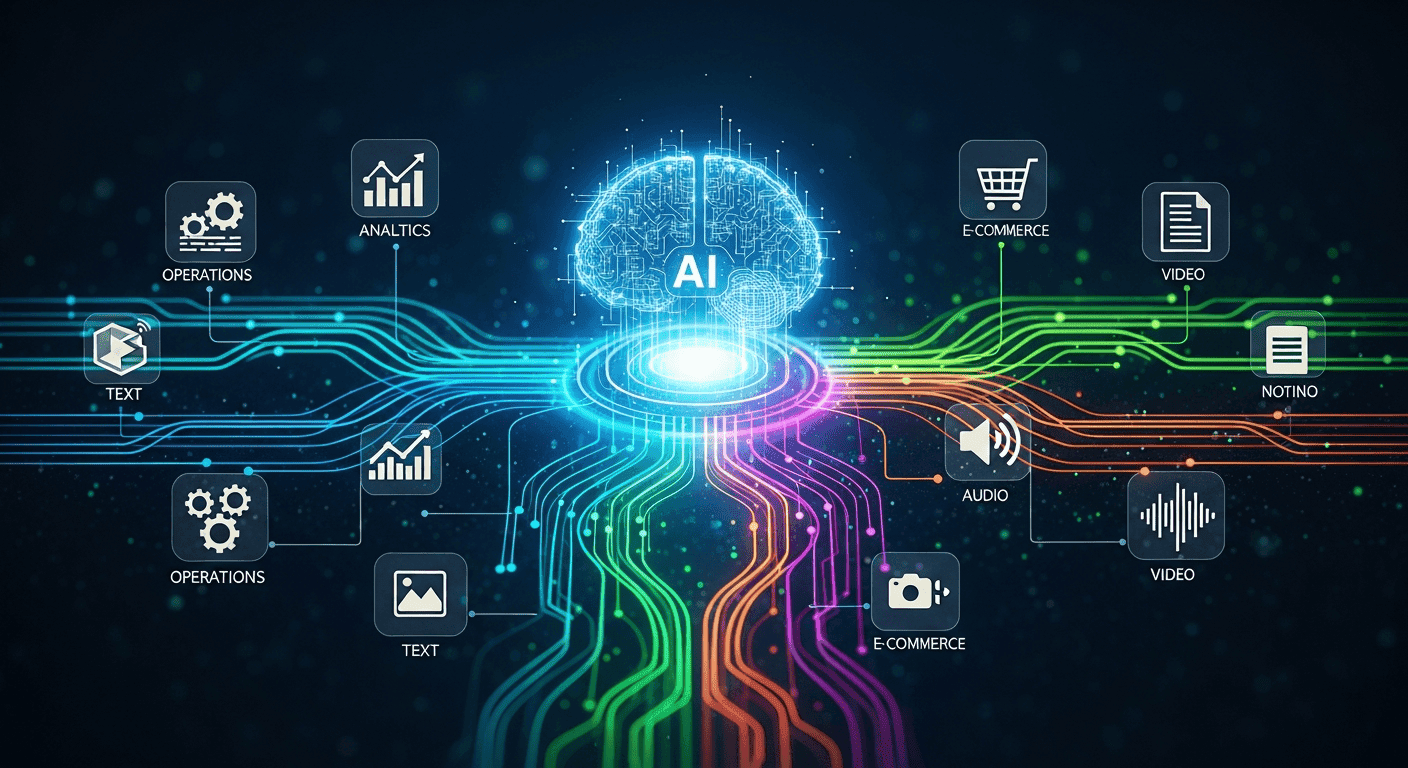

In 2025–26, artificial intelligence is moving beyond single-modality models toward multimodal AI tools and integration platforms that let enterprises combine diverse data sources (text, images, audio, and video) in unified workflows. These technologies not only improve how well AI systems understand and act on complex information but also break down traditional silos by connecting and automating work across organizational functions. As businesses adopt these tools, they face both strategic opportunity and operational complexity.

Multimodal models such as Google’s Gemini family are leading this shift. They are designed to process several data types natively within a single model, which supports richer analysis and decision-making. Integration platforms that embed multimodal AI extend these capabilities further, letting organizations build automated pipelines for customer service, compliance, content generation, and operational analytics. Together, multimodal intelligence and integration platforms allow AI to support a wider share of human workflows, improving efficiency, reducing context switching, and extracting value from data that previously went unused.

Background & Context

The journey toward multimodal AI and integrated platforms began with the rise of large language models such as OpenAI’s GPT family, and with Google’s multimodal offerings that augmented text capabilities with visual and audio inputs. Early enterprise adoption concentrated on isolated use cases such as chatbots and image-analysis tools. The paradigm expanded as organizations began to demand systems that could process and reason across several data forms at once, mirroring how humans naturally perceive and interpret information. Multimodal AI emerged to meet this demand, with architectures designed to analyze text, images, video, audio, and structured data within a unified context.
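To make the idea of a "unified context" concrete, here is a minimal sketch of how a single request might bundle text and media together. The `MultimodalRequest`, `TextPart`, and `MediaPart` names are hypothetical and do not reproduce any vendor's API; real model services such as Gemini define their own request schemas. The point is only that every modality travels in one ordered list, so the model can reason over all of them at once.

```python
from dataclasses import dataclass, field
from typing import List, Union


# Hypothetical part types; real model APIs define their own schemas.
@dataclass
class TextPart:
    text: str


@dataclass
class MediaPart:
    mime_type: str  # e.g. "image/jpeg" or "audio/wav"
    data: bytes     # raw bytes of the image, audio clip, or video


Part = Union[TextPart, MediaPart]


@dataclass
class MultimodalRequest:
    """One request carrying every modality in a single ordered context."""
    parts: List[Part] = field(default_factory=list)

    def add_text(self, text: str) -> "MultimodalRequest":
        self.parts.append(TextPart(text))
        return self

    def add_media(self, mime_type: str, data: bytes) -> "MultimodalRequest":
        self.parts.append(MediaPart(mime_type, data))
        return self

    def modalities(self) -> List[str]:
        """Distinct top-level modalities, in order of first appearance."""
        seen: List[str] = []
        for part in self.parts:
            if isinstance(part, TextPart):
                modality = "text"
            else:
                modality = part.mime_type.split("/")[0]
            if modality not in seen:
                seen.append(modality)
        return seen


if __name__ == "__main__":
    req = (
        MultimodalRequest()
        .add_text("Summarize the defect in this photo and the attached call.")
        .add_media("image/jpeg", b"<jpeg bytes>")
        .add_media("audio/wav", b"<wav bytes>")
    )
    print(req.modalities())  # ['text', 'image', 'audio']
```

Keeping parts in order (rather than in separate per-modality fields) preserves how a user interleaves instructions and media, which is the property a unified-context architecture relies on.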

Integration platforms, historically focused on connecting applications, have evolved as well. With AI embedded in these platforms, businesses can automate data movement, transform diverse inputs, and operationalize AI insights without extensive coding expertise. This shift is driving adoption of tools that support entire AI-enabled workflows rather than isolated model predictions.
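A simplified sketch of the routing step such a platform performs is shown below: records arriving from different systems are dispatched by modality to a handler and normalized into a common `Insight` shape. All names here (`Record`, `Insight`, `HANDLERS`, `run_pipeline`) are hypothetical, and the handlers are stubs standing in for calls to a model service.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Record:
    source: str    # originating system, e.g. "crm" or "call-center"
    modality: str  # "text", "image", "audio", ...
    payload: bytes


@dataclass
class Insight:
    source: str
    modality: str
    summary: str


# Hypothetical stand-ins; a real platform would invoke a model service here.
def handle_text(r: Record) -> str:
    return f"text of {len(r.payload)} bytes"


def handle_image(r: Record) -> str:
    return f"image of {len(r.payload)} bytes"


HANDLERS: Dict[str, Callable[[Record], str]] = {
    "text": handle_text,
    "image": handle_image,
}


def run_pipeline(records: List[Record]) -> Tuple[List[Insight], List[Record]]:
    """Route each record to the handler registered for its modality.

    Records with no registered handler are returned separately for review
    instead of being silently dropped.
    """
    insights: List[Insight] = []
    unhandled: List[Record] = []
    for r in records:
        handler = HANDLERS.get(r.modality)
        if handler is None:
            unhandled.append(r)
            continue
        insights.append(Insight(r.source, r.modality, handler(r)))
    return insights, unhandled
```

Registering handlers in a dictionary keeps the routing logic unchanged when a new modality is added: supporting audio would mean adding one entry to `HANDLERS` rather than editing the pipeline.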

Expert Quotes / Voices

Industry commentary frames the convergence of multimodal AI and integration platforms as a defining enterprise-technology trend for 2026. Technology leaders emphasize that integrating AI reasoning across data types and system boundaries is becoming a key source of competitive advantage. Market analysts observe that AI adoption has shifted from experiments to scalable systems, with a growing share of organizations embedding AI agents and multimodal intelligence into core business processes to gain efficiency and insight at scale.

Market / Industry Comparisons

Traditional AI models focused on narrow tasks such as text summarization or image classification. Next-generation multimodal tools, including Google’s Gemini and other emerging systems, can understand and generate content across several modalities, giving them context that earlier single-modality tools lacked. Integration platforms now embed these capabilities to streamline tasks from data transformation to predictive analytics, whereas legacy enterprise software required manual integration scripts or extensive middleware.

A growing ecosystem of platforms, including unified workspaces and multimodal model services, competes in this space. They differ in deployment models, integration capabilities, and support for enterprise workflows, but collectively they illustrate the rapid maturation of this sector as it moves from proof-of-concept to production readiness.

Implications & Why It Matters

The implications of multimodal AI tools and integration platforms span multiple organizational layers:

- Productivity Gains: By automating complex tasks that span multiple data types and systems, enterprises can dramatically reduce manual workload and context switching.

- Richer Insights: Multimodal AI enables deeper analysis by combining text, visual, and audio data, resulting in more contextually aware decision support.

- Streamlined Workflows: Integration platforms empower organizations to embed AI directly into business processes, reducing friction between tools and data sources.

These shifts not only improve internal efficiency but also enhance customer experiences, as AI can deliver more personalized and comprehensive interactions based on richer data interpretation.

What’s Next

Looking ahead, the integration of multimodal AI with enterprise systems is expected to accelerate, with agentic AI and context-aware automation further extending platform capabilities. Interoperability standards such as the Model Context Protocol (MCP), an open standard introduced by Anthropic for connecting AI models to external tools and data, will play a vital role in shaping future architectures. These technologies will likely enable new classes of applications, from intelligent automation assistants to analytics engines that need minimal human intervention while still meeting high trust and governance standards.
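As an illustration of what "model context sharing" looks like on the wire, MCP messages are JSON-RPC 2.0 objects, and the specification defines methods such as `tools/list` and `tools/call`. The sketch below only builds those messages; it omits the initialization handshake and the transport (stdio or HTTP), and the tool name `lookup_invoice` and its arguments are hypothetical. A production client would normally use an official MCP SDK rather than hand-built JSON.

```python
import json
from itertools import count

# Monotonic request IDs so responses can be matched to requests.
_ids = count(1)


def mcp_request(method: str, params: dict) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP is built on."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": method,
        "params": params,
    })


# Ask a server which tools it exposes, then invoke one of them.
list_msg = mcp_request("tools/list", {})
call_msg = mcp_request("tools/call", {
    "name": "lookup_invoice",                 # hypothetical tool name
    "arguments": {"invoice_id": "INV-1042"},  # hypothetical arguments
})

if __name__ == "__main__":
    print(list_msg)
    print(call_msg)
```

Because any model host that speaks this format can discover and call the same tools, an integration built once as an MCP server can be reused across different AI applications, which is the interoperability benefit the paragraph above describes.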

Pros and Cons

Advantages

- Unified data understanding across text, vision, audio, and other modalities.

- Streamlined integration reduces reliance on fragmented legacy systems.

- Scalable automation supports enterprise-wide workflows.

Limitations

- Complexity of implementation may require specialized expertise.

- Infrastructure demands can be significant for real-time multimodal processing.

- Governance and explainability remain ongoing challenges with rich, cross-modal models.

Our Take

The rise of multimodal AI tools and integration platforms represents a strategic inflection point for enterprise technology. Businesses that adopt these systems can achieve deeper insights, more seamless operations, and significant efficiency gains. However, the pace of integration must be balanced with governance and infrastructure readiness.

Wrap-Up

As enterprises continue to embrace AI that understands and acts on multiple forms of data, multimodal tools and integration platforms are becoming core building blocks of modern digital workflows. The next wave of innovation will focus on tighter integration, stronger reasoning across contexts, and more accessible, trustworthy technology, driving broad transformation across industries.

Sources

Reuters – Meta releases new multimodal models driving enterprise AI development - https://www.reuters.com/technology/meta-releases-new-ai-model-llama-4-2025-04-05

TrnDigital – How multimodal AI is optimizing enterprise workflows - https://www.trndigital.com/blog/multimodal-ai-in-enterprise-workflows-leveraging-text-image-video-audio/

Google Cloud – Multimodal AI use cases and integration capabilities - https://cloud.google.com/use-cases/multimodal-ai

Wikipedia – Model Context Protocol, Anthropic’s open standard for AI integration - https://en.wikipedia.org/wiki/Model_Context_Protocol